Context #

I was in the process of migrating data from one zpool (hikari) to another (vault). The goal was to rebuild the former with optimized settings, such as changing the ashift value and turning off the atime property to enhance performance and longevity. The options I considered were rsync, zfs send | receive, and zfs clone:

- rsync: although rsync was a safe option, I opted against it because it didn’t provide the learning experience I was looking for in this migration process

- zfs send | receive: I wanted to try zfs send | receive due to its efficiency and the opportunity to deepen my understanding of ZFS’s advanced features.

- zfs clone: since I hadn’t read up on zfs clone at the time, I didn’t bother considering it as an option yet.

In hindsight, investing time in understanding and experimenting with zfs clone may have provided a safer and more controlled approach to the migration.
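A minimal sketch of what the rebuild goal mentioned above could look like. The value ashift=12 is an assumption (it is commonly used for 4 KiB-sector drives), the disk names are placeholders, and print_create_cmd is a hypothetical helper that only prints the command so it can be reviewed before anything touches a disk.

```shell
#!/bin/sh
# Hypothetical helper: prints a zpool create command for review.
# It does NOT run the command. ashift=12 is an assumption; check what
# your drives actually need before using it.
print_create_cmd() {
    pool=$1
    shift
    # -o sets a pool property (ashift); -O sets a property on the
    # pool's root dataset (atime), which child datasets inherit.
    echo "zpool create -o ashift=12 -O atime=off $pool $*"
}

# DISK-A and DISK-B are placeholder names, not real device IDs.
print_create_cmd hikari /dev/disk/by-id/DISK-A /dev/disk/by-id/DISK-B
```

After a pool is created, `zpool get ashift hikari` and `zfs get atime hikari` show whether the settings took effect.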

What went wrong #

The history of the now-dead zpool below tells the whole story. I added some annotations so you can see the 5 stages of grief:

2024-05-06.22:57:12 zfs snapshot vault/test@1057

2024-05-06.23:02:56 zfs snapshot vault/test@1058

2024-05-06.23:07:04 zfs snapshot vault/test@1059

2024-05-06.23:09:27 zfs rollback -r vault/test@1057

2024-05-06.23:11:19 zfs destroy vault/test

2024-05-07.20:28:02 zfs send hikari/books@2024-05-07 | sudo zfs receive -F vault <---- This is when I FUBAR

2024-05-07.20:28:02 zfs receive -F vault

2024-05-07.20:32:32 zfs receive -F vault/books

2024-05-07.20:33:38 zfs rollback vault/books@2024-05-07 <--- Denial.

2024-05-07.20:35:05 zfs rollback vault@2024-05-07

2024-05-07.20:41:00 zfs rollback vault@2024-05-07 <--- Anger & bargaining. Rollback in futility.

2024-05-07.20:44:33 zfs rollback vault@2024-05-07

2024-05-07.21:11:47 zfs destroy vault/books@2024-05-07

2024-05-07.21:17:50 zpool export vault

2024-05-08.11:22:55 zpool import -a -d /dev/disk/by-id/wwn-0x50014ee2c03741b6-part1 -d /dev/disk/by-id/wwn-0x50014ee2158c233a-part1 <--- Acceptance.

This command

2024-05-07.20:28:02 zfs send hikari/books@2024-05-07 | sudo zfs receive -F vault

sends the snapshot hikari/books@2024-05-07 and forcibly receives it into vault, which is the root dataset of the pool, rather than into a new child dataset such as vault/books. The -F flag is significant here because it forces the receive operation, which includes rolling back the file system to the most recent snapshot before the receive, thus overwriting the existing data on vault.
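A more cautious variant of that pipeline, as a sketch: target a child dataset instead of the pool root, drop -F, and do a dry run first with zfs receive -n -v, which reports what would be received without writing anything. The names check_target and plan_receive are hypothetical helpers, not part of ZFS, and they only print the pipeline for review.

```shell
#!/bin/sh
# Hypothetical guard: accept only child datasets (names containing "/").
# A bare pool name such as "vault" is the pool's root dataset.
check_target() {
    case "$1" in
        */*) return 0 ;;
        *)   echo "refusing: '$1' is a pool root dataset" >&2
             return 1 ;;
    esac
}

# Prints (does not run) a dry-run pipeline for a snapshot and a target.
# -n: do not actually receive the stream; -v: print what would happen.
plan_receive() {
    snapshot=$1
    target=$2
    check_target "$target" || return 1
    echo "zfs send $snapshot | zfs receive -n -v $target"
}

plan_receive hikari/books@2024-05-07 vault/books
```

Once the dry run looks right, the same pipeline without -n performs the actual receive.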

The lessons #

1. Repeat after me: NEVER. TINKER. IN. PRODUCTION! It is pure stupidity to experiment in a production environment. I knew it before, but I never really internalized it until now. The fact that it was a homelab, which often straddles the line between experimentation and critical services, only helped blur the line further and eventually led to my demise. So it’s essential to establish and adhere to a clear boundary.

2. Understand the nature of a homelab: it is a mix of testing and production environments. A homelab often serves dual purposes: it’s a place to experiment and learn, but it may also host essential services. Recognizing this dual role means the following steps can be taken to reduce stupid mistakes:

- Segment the environment: clearly define and separate the environments within the homelab; use different storage pools, network segments, or even physical hardware to differentiate between production and testing areas.

- Backup: regularly back up critical data following the 3-2-1 principle. Treat the homelab with the same level of diligence you would apply in a professional environment, ensuring that data loss can be quickly mitigated.

- Documentation and Planning: Document the lab environment and any planned changes. This practice not only helps in preventing mistakes but also in understanding and recovering from them if they occur.

Well, at least now I have an answer for that sysadmin interview question: “What’s your biggest fuckup?” It could be something like:

In my homelab, where I juggle both testing and running some essential personal services, I once made the classic blunder of forcefully receiving a ZFS snapshot into a production zpool, accidentally wiping out critical data. That stupid mistake taught me a lesson I won’t soon forget: never make untested changes directly in a production environment. To avoid repeating this nightmare, I set up a clear divide between my testing and production systems, started doing regular backups, and began meticulously documenting every change. This hiccup didn’t just teach me about risk management and problem-solving—it turned me into a more cautious and competent sysadmin.

3. Creating a zpool with device IDs is a much better practice than using enumerated device names

Just like in fstab, the right way to add disks to a zpool is by using /dev/disk/by-id/XXXX. These entries are unique to the drives themselves and will not change when you move disks to a different controller. Avoid the enumerated device names (e.g. /dev/sda, /dev/sdb), as they can change when you add or remove devices from the system.
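To see which stable name belongs to which enumerated device, the symlinks under /dev/disk/by-id can be resolved. A small sketch, assuming a Linux system where readlink -f is available; list_by_id is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: for every symlink in a directory (default:
# /dev/disk/by-id), print "<stable name> -> <resolved device node>".
list_by_id() {
    dir=${1:-/dev/disk/by-id}
    for link in "$dir"/*; do
        # Skip anything that is not a symlink (also covers an empty
        # or missing directory, where the glob stays unexpanded).
        [ -L "$link" ] || continue
        printf '%s -> %s\n' "${link##*/}" "$(readlink -f "$link")"
    done
}

list_by_id
```

The name on the left is what goes into zpool commands; the path on the right is whatever the kernel happened to call that disk on this boot.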

Don’t be like me: when I exported the striped zpool consisting of two separate disks, I couldn’t import it back for some reason. Here is what I did to import it properly:

- Run

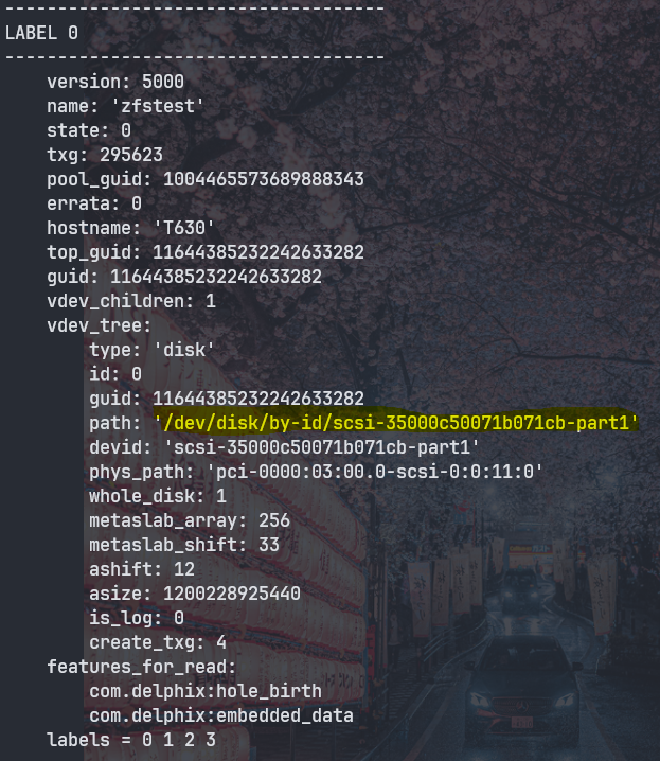

zdbon the partitions that used to make up the zpool

zdb -l /dev/sdh1

zdb -l /dev/sdi1

- Get the paths of both partitions, such as

/dev/disk/by-id/wwn-0x50014ee2c03741b6-part1

- Then import both partitions automatically (-a flag, no need to remember the pool name)

sudo zpool import -a -d /dev/disk/by-id/<path1> -d /dev/disk/by-id/<path2>

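Importing this way also changes the device nodes the pool records for its disks: they become the /dev/disk/by-id paths instead of the enumerated /dev/sdX names.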
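The import step can be sketched as a small helper that assembles the command from any number of by-id paths. print_import_cmd is a hypothetical name, and it only prints the command instead of running it. The two paths are the ones from the pool history earlier in this post.

```shell
#!/bin/sh
# Hypothetical helper: builds a "zpool import -a" command with one -d
# option per partition path and prints it for review.
print_import_cmd() {
    cmd="zpool import -a"
    for part in "$@"; do
        cmd="$cmd -d $part"
    done
    echo "$cmd"
}

print_import_cmd \
    /dev/disk/by-id/wwn-0x50014ee2c03741b6-part1 \
    /dev/disk/by-id/wwn-0x50014ee2158c233a-part1
```

Running zpool import with only the -d options, without -a or a pool name, lists the pools found there without importing anything, which is a safe first check.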