## Apr 2023: rekindled

- Bought 2 ebooks, but they were password-locked, forcing me to use proprietary software to read them, so I couldn’t read them locally. While trying to crack the PDF password with hashcat + rockyou, I realised I’d had a lifelong interest in general IT, hacking, tinkering etc. (i.e. the only things I ever stayed up until 4am for were computer games or tinkering with tech-related stuff). Found out more about digital forensics and computer forensics. Very interesting!

## Jun 2023: planning my way forward

- Heard that one cannot get straight into DFIR but should start somewhere like SOC analyst or sysadmin

- Learned from Reddit & confirmed through Seek that DFIR jobs are not common in Australia, so switched my target to an entry-level sysadmin position

- Learning plan & roadmap created

- Went through the first few lectures of several general computing courses that seemed to complement each other: Harvard CS50, NYU Computer Hardware & OS… all in parallel, hoping that one area can help explain/enhance my understanding of the others

- Read more great blog posts about starting a career, pillars of skills… Went through A+ videos, went deep into subnet masks and subnetting, wrote my 1st blog post, and looked for grad certs/grad dips in comp sci at local universities with CSP to save fees
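Since subnetting came up here, a small bash sketch of the arithmetic may help: it turns an IPv4 address into a 32-bit integer, applies the mask for a given prefix length, and derives the network address, broadcast address and usable host count. The function names are hypothetical and the example address is arbitrary.

```shell
#!/usr/bin/env bash
# Sketch: subnet arithmetic with bash integers (function names are hypothetical).
set -euo pipefail

# "192.168.1.130" -> 3232235906
ip_to_int() {
  local IFS=.
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 3232235906 -> "192.168.1.130"
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# subnet_info <ipv4-address> <prefix-length>
subnet_info() {
  local ip prefix mask net bcast hosts
  ip=$(ip_to_int "$1")
  prefix=$2
  # Top $prefix bits set, trimmed to 32 bits (bash integers are 64-bit)
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  net=$(( ip & mask ))
  # Broadcast = network address with all host bits set
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))
  # Usable hosts exclude the network and broadcast addresses (meaningful up to /30)
  hosts=$(( bcast - net - 1 ))
  echo "mask=$(int_to_ip "$mask") network=$(int_to_ip "$net") broadcast=$(int_to_ip "$bcast") hosts=$hosts"
}

subnet_info 192.168.1.130 26
# prints: mask=255.255.255.192 network=192.168.1.128 broadcast=192.168.1.191 hosts=62
```

A /26 leaves 6 host bits, so 2^6 - 2 = 62 usable addresses, which matches the output.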

## Jul 2023: first VPS running OpenBSD

- Set up an OpenBSD VPS on Vultr to store sensitive data on detachable block storage, and enabled passwordless SSH with a key pair

- Learned how secure and robust OpenBSD is in multiple applications, such as a web server (this blog is running on one) or a simple router & firewall

- Received a Raspberry Pi Zero – thinking of building a Pi-hole, see notiapoint.com

## Aug 2023: starting homelab, VMs

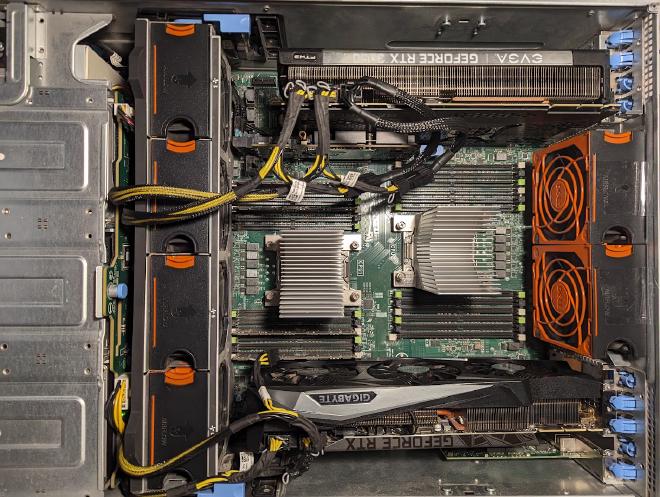

- Bought an old Dell T630 server & an old Cisco Catalyst 3750 switch - started tinkering

- Learned what lifecycle management is, what iDRAC is, and how it’s used to remotely manage the server

- Learned to update firmware, BIOS

- Learned about different types of hypervisors

- Got a free VMware ESXi 8.0 license to run bare-metal

- Learned about the difference between regular HDDs and SAS HDDs, different RAID array types, FreeNAS/TrueNAS, and ZFS compared to other filesystems

- Set up a 5 x SAS RAID5 array
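Since RAID levels came up, a quick way to sanity-check usable capacity for equal-size disks is a few lines of bash arithmetic. The helper name and the 600 GB disk size are hypothetical, and real arrays lose a bit more to metadata and filesystem overhead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: raid_usable <level> <disk_count> <disk_size_gb>
# Rough usable capacity for equal-size disks, ignoring metadata/filesystem overhead.
raid_usable() {
  local level=$1 n=$2 size=$3
  case "$level" in
    0)  echo $(( n * size )) ;;          # striping only, no redundancy
    1)  echo "$size" ;;                  # every disk holds the same data
    5)  echo $(( (n - 1) * size )) ;;    # one disk's worth of parity, survives 1 failure
    6)  echo $(( (n - 2) * size )) ;;    # two disks' worth of parity, survives 2 failures
    10) echo $(( n / 2 * size )) ;;      # striped mirrored pairs
    *)  echo "unsupported level: $level" >&2; return 1 ;;
  esac
}

# e.g. five 600 GB SAS disks in RAID5 (disk size is an assumption, not from the log)
raid_usable 5 5 600   # prints 2400
```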

- Learned to boot from an image using vFlash

- Decided to install ESXi on the vFlash card instead of any existing drive

- Spun up my first VMs, learned about thick and thin provisioning

- Got a shell on ESXi and learned some basic `esxcli` commands

- Learned to attach a USB drive as a VMFS datastore

- Learned to configure `vmnic` and let ESXi and VMs connect to the internet

## Sep 2023: upgrading homelab

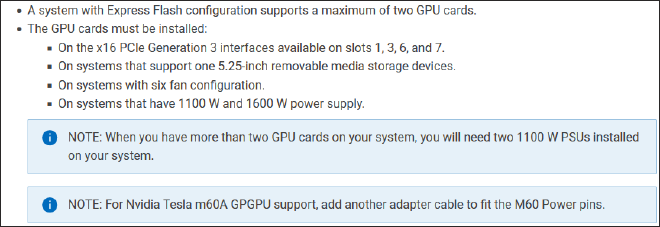

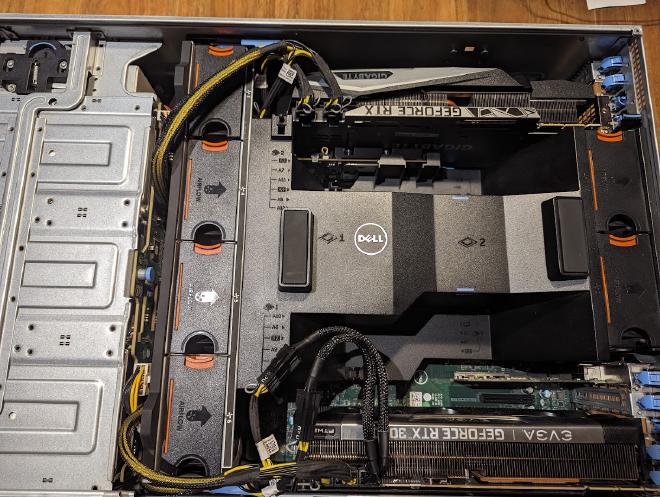

- Got 2 GPUs and a more powerful PSU (1600W) to handle the extra load

- Learned that I need a power supply extension board & cable kit to power 2 GPUs

- Learned the different power cable connectors (6-pin vs 8-pin)… and learned that I can merge two 6-pin connectors into one 8-pin connector to power the 2nd GPU

- Learned from the manual that I need the 2nd CPU in order to run the 2nd GPU. Also realised that I had installed the 2nd GPU in the wrong, low-priority PCIe slot (slot 7), which is why the OS couldn’t see it

- Had to mod the “wind tunnel” shroud to fit the oversized 2nd GPU in slot 6; the network card went to slot 7.

- Purchased & installed the 2nd CPU (Intel Xeon E5-2660 v3)

- Learned about FreeBSD while trying to set up a pfSense VM; tried installing FreeBSD with X.org and a desktop environment for fun

- Completed building the server with 2 x GPUs

- Fixed the fan overspeed issue after installing the 2nd GPU (kids!! can you hear me??). Upgraded the BIOS and PSU firmware along the way.

- Set up Samba for file sharing with the other Windows machines on the home network

## Oct 2023: set up home network

- Switched ISP and installed OpenWrt on an old Netgear wifi router as the main router, replacing the previous ISP-provided Archer C1200

- Learned that I need a rollover (console) cable to set up the Cisco switch

- Learned that the switch has SFP ports and needs (expensive) GLC-T 1000BASE-T transceivers to take the RJ45 connectors of CAT6 cables (part # 30-1410-03 or 30-1410-02)

- Ran CAT6 cable through the attic connecting the ISP-provided router to the homelab switch (got myself covered in fiberglass…)

- Learned how to SSH into an AWS instance running CentOS 7 using both AWS CloudShell and PowerShell 7 on my work computer. Started to get how SSH and its key pairs work in a cloud environment.

## Nov-Dec 2023: tried running local AI

- Naturally got sidetracked by machine learning, local AI etc. 2 GPUs were great for that purpose!

- During that time learned about `git` and `git lfs`

- Tried to pass through a GPU to an Ubuntu VM for local AI fine-tuning; struggled to find the right driver/CUDA/kernel version combo to utilise both GPUs. Installing the CUDA Toolkit by reading the (outdated) docs is a pain in the ass.

- No success getting the Ubuntu VM in ESXi to see the 2nd GPU… the different drivers on ESXi and in the VM OS started to complicate the whole process.

- TIL I can use `watch -d -n 0.5 nvidia-smi` to watch GPU usage, or any other command’s output.

- Took a break from local AI

## Jan 2024: looked into cloud

- As advised by a close friend working in IT, started looking into adding cloud to my skill mix. Came back to my interest in sysadmin

- Wrote a post about which cloud to choose

- Went through MS Learn Azure Fundamentals in 3 days

- While backing up by simply copying the LLMs I had downloaded (~400GB) to the backup volume, I realised there are a lot of large objects in `git/lfs/`; will try to see if I can remove these safely. Still have a very vague idea of how git works in general

- Uninstalled ESXi as VMware got acquired by Broadcom and the free licenses got pulled. Might have to come back to older (pirated) ESXi versions. Reckon they should be enough for Linux sysadmin and cloud learning purposes.

- Found myself increasingly interested in the sysadmin and infrastructure side of IT. Frequently found myself staying up until 2am, wide awake and excited!

## Feb 2024: kept learning Linux admin, Docker

- Ran Ollama with `deepseek-coder:33b` to help speed up the learning process. Super helpful!

- Got an APC UPS; learned that it shouldn’t be plugged into an anti-surge powerboard

- Learned about C13/C14 power cables, which are needed to connect my UPS to my server’s PSUs

- Learned to add a second domain to the httpd web server, and to create an SSL certificate with Let’s Encrypt to enable HTTPS

- TIL Docker Desktop is different from Docker CE: it uses a different builder and a different context, and installing Docker Desktop disables the docker engine daemon etc. Also learned how to switch between the two in the CLI.

- Tried to run a hashcat Docker container (using the NVIDIA GPU), but it failed with the following error; might be a permission issue. Will try to learn how to run Docker Desktop as root, or in rootless mode

`(HTTP code 400) unexpected - failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running hook #0: error running hook: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy' nvidia-container-cli: initialization error: load library failed: libnvidia-ml.so.1: cannot open shared object file: no such file or directory: unknown`

- Created a volume on the 5 x SAS disk RAID5 array to store backups of the server, using `fdisk`. Finally understood that a “mount point” is simply a folder through which you access an entire disk partition (something I failed to understand clearly as a long-time Windows user)

- Created a shell script to automate/schedule this backup with a cron job

- Learned about the difference between `tar` and `rsync`. I’ll still have to learn to use both, though `rsync` seems to fit my needs particularly well for now.

- Finally able to run the hashcat Docker container as root from the CLI. It really was a permissions issue.

- Installed `zfsutils-linux` and created a 14TB zpool spanning 2 SATA disks as another place to store backups. Must write an article comparing these 2 options.

- Set up Tailscale on my work computer and homelab for remote access. Learned about `/etc/resolv.conf`, why it gets overwritten on WSL, and how to use `systemd-resolved` as a DNS manager. Finally intuited how Tailscale and, similarly, Cloudflare work. Also started to intuit the concept of a shell, and a shell within a shell.

- Ubuntu booted up extremely slowly. TIL handy diagnostic tools like `systemd-analyze blame` or `systemd-analyze critical-chain`; rendering the whole boot process into a nifty chart with `systemd-analyze plot > bootchart.svg` is particularly helpful for visual learners like me.

- Finally intuited Docker contexts. Uninstalled Docker Desktop to move to Docker CE, working entirely on the CLI. Learned to save Docker Desktop images to tarballs and load them back into CE. Learned to organise my Docker directory. Created my first .yml file and spun up a container from it. Starting to get the concept of IaC now.

- Spun up my first KVM VM running OpenBSD. Learned that setting up a console to shell into the VM can be tricky. I don’t understand enough about serial consoles etc.

- TIL that, due to the cost of bandwidth with Aussie ISPs, Cloudflare’s CDN on the free plan actually reroutes traffic overseas before sending it back to the host, defeating the purpose of a CDN

- Realised that rsync alone is not enough in my use case, as I will tinker and break a lot of stuff. Better to have historical backups of the machine to roll back to. Created another bash script to back up the system, this time using tar, for versioning in the form of multiple tarballs. Will pair this with git eventually. Learned that a trailing `/` after directories makes a difference (at least in tar), and that tar works relative to the current dir, so if you want to archive files etc. from another dir, use `-C <dir>`; this flag is order-sensitive, as it applies to all the arguments that follow it.

- Spun up a container running an AlmaLinux base. Took a long time. Will try to install Foreman, not Foreman + Katello, for simplicity’s sake while learning (only learned recently that they are totally different beasts). Maybe I’ll have to use a VM here instead of a container, but I’m slowly building up my knowledge foundation.
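A minimal sketch of the versioned-tarball idea, assuming GNU tar and coreutils; it is not the actual script from this log, and `backup_tar` and its arguments are hypothetical. It shows `-C` placed before the directory operand it should apply to, plus a simple keep-the-newest-N rotation.

```shell
#!/usr/bin/env bash
# Minimal sketch of versioned tarball backups (GNU tar + coreutils assumed).
# backup_tar and its arguments are hypothetical, not the script from this log.
set -euo pipefail

# backup_tar <parent-dir> <dir-name> <dest-dir> [keep]
backup_tar() {
  local src_parent=$1 src_name=$2 dest=$3 keep=${4:-7}
  local stamp archive
  stamp=$(date +%Y%m%d-%H%M%S)
  archive="$dest/${src_name}-${stamp}.tar.gz"
  mkdir -p "$dest"
  # -C applies to the operands that follow it: tar changes into $src_parent first,
  # so entries are stored as "dir-name/..." rather than with the full absolute path.
  tar -czf "$archive" -C "$src_parent" "$src_name"
  # Rotation: timestamped names sort chronologically, so delete all but the newest $keep.
  ls -1 "$dest/${src_name}"-*.tar.gz | sort | head -n -"$keep" | xargs -r rm --
  echo "$archive"
}

# Example cron entry (script path is hypothetical): nightly at 02:00
#   0 2 * * * /usr/local/bin/backup.sh
```

For example, `backup_tar /home me /mnt/backup-storage/tarballs 7` would keep a week of nightly archives of `/home/me`, each listing its contents as `me/...`.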

- AlmaLinux: to view all open ports, run `ss -tulpn` (make sure the `iproute` package is installed first)

- Encountered “Could not get default values, cannot continue” when running `foreman-installer`. The log shows:

Error: The parameter '$slowlog_log_slower_than' must be a literal type, not a Puppet::Pops::Model::AccessExpression (file: /usr/share/foreman-installer/modules/redis/manifests/init.pp, line: 426, column: 15)

- Downgraded Puppet as suggested here

- Encountered a different error

Forward DNS points to 127.0.1.1 which is not configured on this server

Output of 'facter fqdn' (T630.lan) is different from 'hostname -f' (T630)

- Set the hostname’s domain as suggested here.

The solution is to create a fully qualified domain name (FQDN). I did it this way (source; also answered here):

1. Change the server IP to a static one. I reserved the IP address in the router and left the server in DHCP mode; one might apply a static address on the server itself.

2. Set a fully qualified host name, like foreman.example.com, using the `hostnamectl set-hostname foreman.example.com` command.

3. Edit (append to) the /etc/hosts file to map the host name of the server (step 2) to its IP (step 1); one can use this command as root: `echo "192.168.1.50 foreman.example.com" >> /etc/hosts` (of course, change the IP and the hostname). Note the `>>`: a single `>` would overwrite the whole file.
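Step 3 is easy to get wrong, since a single `>` truncates `/etc/hosts`. A minimal bash sketch of an idempotent append, assuming the usual `IP FQDN shortname` line format; `add_hosts_entry` and the `HOSTS_FILE` override are hypothetical, so the function can be tried on a scratch file first. Afterwards, `hostname -f` should print the FQDN, which is what foreman-installer compared against `facter fqdn` above.

```shell
#!/usr/bin/env bash
# Minimal sketch: append "IP FQDN shortname" to a hosts file only if that mapping is missing.
# HOSTS_FILE is a hypothetical override so the function can be tried on a scratch copy first.
add_hosts_entry() {
  local ip=$1 fqdn=$2
  local short=${fqdn%%.*}                  # foreman.example.com -> foreman
  local file=${HOSTS_FILE:-/etc/hosts}
  # Already mapped? Look for a line whose first field is the IP and another field is the FQDN.
  if awk -v ip="$ip" -v name="$fqdn" \
       '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  # ">>" appends; a single ">" would truncate the file and wipe the localhost entries
  printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
}

# Usage (from a root shell): add_hosts_entry 192.168.1.50 foreman.example.com
```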

- Got the error

System has not been booted with systemd as init system (PID 1). Can't operate.

- Destroyed the container to use a different image, `almalinux:8-init`. Didn’t work. Don’t want to delve too deep into containers yet. Decided to dual boot AlmaLinux 8 on the 2nd SSD (virtualisation host profile).

## Mar 2024: KVM KVM KVM

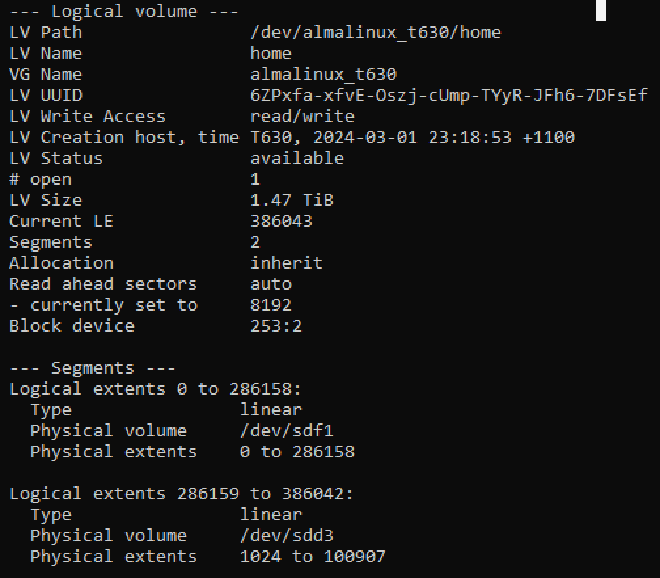

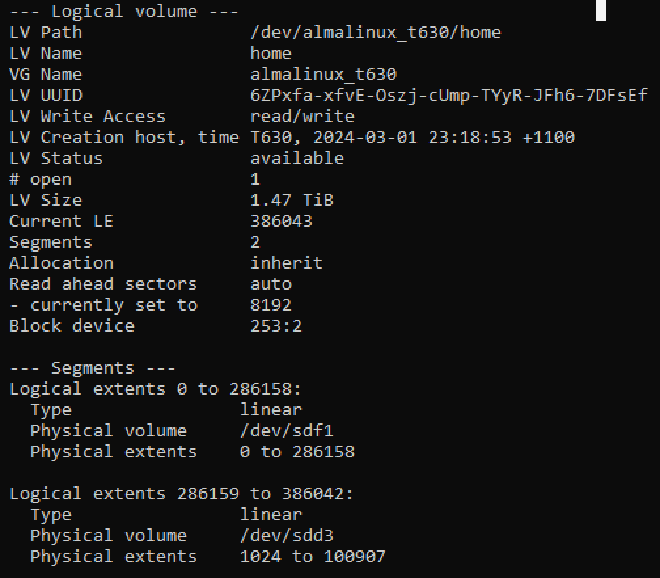

- During the installation of Alma, learned more about LVM and compared xfs and ext4. Very interesting! Noticed that AlmaLinux creates a logical volume (LV) for `/home` by default, spanning 2 disks, which made me nervous because I don’t know how to assign `/home` to a separate disk like I did before.

- Read a lot of discussions and advice about Linux admin. Started to move away from Ubuntu and more into the Enterprise Linux ecosystem. Will probably only use Ubuntu for fun, running LocalAI etc.

- Successfully installed Foreman on the ThinkPad T480 running AlmaLinux Workstation. Still don’t understand the architecture of how Foreman interacts with future hosts.

- Installed AlmaLinux 8, but with the virtualization host profile. Very interesting and daunting to use only the CLI to manage the server, but this is what I’m in for.

- Set up the Cockpit web GUI and accessed it remotely from a Windows machine. This is just like ESXi!! Then I realised I have to run Podman instead of Docker for containers, because the two don’t coexist well on the same machine. May have to defer learning about containers until I have a full grasp of KVM? I don’t know why I still shy away from Proxmox. Maybe because it seems like an easy way out from ESXi?

- I need an AlmaLinux ISO to spin up KVM VMs, but it isn’t stored on the current drives of the newly installed Alma. It’s locked inside Ubuntu’s ZFS pool, not on a separate drive that can be mounted. The pure CLI environment means I can’t cheat with a GUI

5/3

- Learned to edit `/etc/fstab` to mount the drive (but the ISO was not in there):
  - Get the partition UUID with `lsblk -f`
  - Edit `/etc/fstab` and add `UUID=<partition UUID> <mount point path> <fs type> defaults 0 2`. Use the UUID here instead of a device path like `/dev/sda1`, because if we swap HDD locations, it’ll still use the right drive
  - Create the mount point added in the previous step, such as `/mnt/backup-storage`
  - Update systemd with `systemctl daemon-reload`
  - Mount the disk with `mount <partition> <mount point>`, such as `mount /dev/sda2 /mnt/backup-storage`
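These steps can be rehearsed safely on a copy of the file first. Below is a minimal bash sketch with a hypothetical `add_fstab_entry` helper that appends a UUID-based line and refuses to add a second entry for a mount point already listed; the root-only follow-up commands are left as comments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add_fstab_entry <fstab-file> <uuid> <mount-point> <fs-type>
# Works on any file path so it can be tried on a copy before touching /etc/fstab.
add_fstab_entry() {
  local file=$1 uuid=$2 mnt=$3 fstype=$4
  local line="UUID=${uuid} ${mnt} ${fstype} defaults 0 2"
  # Refuse a duplicate: field 2 of a non-comment line is the mount point
  if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$file"; then
    echo "mount point $mnt already in $file" >&2
    return 1
  fi
  printf '%s\n' "$line" >> "$file"
}

# Real-world follow-up (needs root, shown as comments only):
#   lsblk -f                        # find the partition UUID
#   mkdir -p /mnt/backup-storage
#   systemctl daemon-reload
#   mount /mnt/backup-storage       # mount point alone is enough once fstab has the entry
```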

6/3

- Trying to set up `samba` so I can copy the ISO from my main Windows machine to the drive attached earlier. Couldn’t get through as easily as before with a GUI. Encountered multiple errors:
  - Can’t access the server IP at all. Turned out it was the firewall rule in Cockpit. Added samba to the allowed services
  - Still can’t access the server. Got `system error 1219`, meaning multiple connections to the server exist using the same username with different credentials. But I swear I didn’t have anything connected to the server except File Explorer. Turned out it was the network drive mapping I had set up earlier for media sharing. Disconnecting it, then signing out and back in, fixed it.
  - I could see the shared folders at the IP now, but couldn’t access them: `Error: the network name cannot be found`. After a while diagnosing with `ping`, changing ownership and permissions of the shared dir, `net use` etc., I stumbled upon the `/etc/samba/smb.conf.example` file, which mentions labelling newly created shared dirs as `samba_share_t` because the system runs Security-Enhanced Linux (SELinux). By doing this, SELinux allows Samba to read and write to these dirs. What an eye-opening read!! Now I know what SELinux is lol. Fixed it by recursively modifying the dir contexts with `sudo chcon -t samba_share_t -R <dir>` (learned that `-t` takes an argument, so it can’t be bundled with `-R` as `-tR`, and understood a bit more about Linux command syntax), then restarted the samba service.

- TIL about the idea behind `systemd` and went a bit deeper into the boot process

- Learned that I need to sign the `zfs` kernel module because of Secure Boot. Gotta learn how to do this and write about it. (update: I did it! See here)

- Installed & upgraded Ansible; realised I will need to upgrade python3.6 (RHEL8 default) to 3.9 as well

8/3

- Trying to spin up a KVM VM again after the previous OpenBSD one, but instead of `virt-install`, this time using an XML file, as I think it will pave the way for future automation. And man, it was a struggle for 2 full nights. I was on the verge of “cheating” with `virt-manager` because of its GUI and being more beginner-friendly. But I decided to take the hard way, to understand each line of the XML file and the proper way to spin up KVM VMs. Here is what I learned.

- Finally learned to sign the zfs kernel module!! Feel really good about this! See here

- I have been living on edge, as I got this in the log: `Device: /dev/sdf, SMART Failure: DATA CHANNEL IMPENDING FAILURE DATA ERROR RATE TOO HIGH`. I was right to be nervous. That’s why I have been avoiding using `/home`. Looks like it’s time to learn to replace a disk that belongs to an LVM volume group.

- Got stuck at the first step, moving the extents off `/dev/sdf1` (the failing disk): `pvmove /dev/sdf1` returns “No extents available for allocation.” Realised I can’t move the extents of a 1TB PV into 500GB of free space, even though there are no files on that 1TB. Looks like I have to add a member to the group that’s big enough to hold the extents. But how will I remove the member later?

- OK, kinda got it sorted! Here are the steps

- Getting stuck using cloud-init to spin up an AlmaLinux KVM VM. Can’t pass the user data to the VM for some reason. Arrgh!!

11/3

- After going at it for 3 long nights I finally did it!!! Starting KVM VMs reliably from a generic CentOS Stream cloud image and cloud-init. And the cause of my struggle was so stupid it’s not even funny. Time to start installing Foreman. Gotta remind myself to treat the VMs as cattle instead of pets from now on.
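For reference, the NoCloud data source that cloud images read on first boot boils down to two small files, `user-data` and `meta-data`. The sketch below writes them with bash; the function name, VM name, user, group and key are hypothetical, and `cloud-localds` (from cloud-image-utils) is one way, not the only way, to turn them into a seed ISO.

```shell
#!/usr/bin/env bash
# Minimal sketch: write NoCloud seed files for a single VM.
# Function name, VM name, user name and key are hypothetical; adjust to your setup.
write_cloud_init_seed() {
  local dir=$1 vm_name=$2 user=$3 pubkey=$4
  mkdir -p "$dir"
  # The "#cloud-config" first line is required, otherwise cloud-init won't treat it as cloud-config
  cat > "$dir/user-data" <<EOF
#cloud-config
hostname: ${vm_name}
users:
  - name: ${user}
    groups: wheel
    sudo: ALL=(ALL) NOPASSWD:ALL
    ssh_authorized_keys:
      - ${pubkey}
EOF
  # A new instance-id makes cloud-init treat the VM as a new instance and re-run per-instance config
  cat > "$dir/meta-data" <<EOF
instance-id: ${vm_name}-001
local-hostname: ${vm_name}
EOF
}

# The two files can then be packed into a seed ISO (cloud-localds from cloud-image-utils):
#   cloud-localds seed.iso user-data meta-data
```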

14-25/3

- Something was wrong with Plesk at work: we couldn’t log in via the usual route. It was on and off in a funny way. Suspected a DNS problem. Spent 2 weeks investigating the cause and hardening the whole server, all while planning to move from CentOS to AlmaLinux. This is a good chance for a fresh start without the mess left by the previous admin (nginx auto-restarting every 5 secs made log analysis a pain in the hind). Found an image from Plesk that seemed to make the whole thing much less painful.

- Finally able to use Terraform to spin up some EC2 instances as well as local VMs. Now some more practice before adding Ansible on top for configuration. Maybe I can skip Foreman for now(?)

- Installed webmin in parallel with Cockpit

- Finally got dual GPUs running stably on AlmaLinux with the right driver, kernel etc. Such a pain in the ass, as the docs are all over the place. Learned to undervolt the cards to get better inference performance while saving power! See it here

## Apr 2024: ZFS, backup, and f***ed up

- TIFU while migrating files to a new zpool because I was distracted. Lost quite a few books. Luckily, having backups on other media helped. Learned much more about ZFS zpools, datasets, scrubs, snapshots, ashift etc., and that I must be extra focused at this stage of learning when working in this kind of production-lab hybrid environment, which is the nature of a homelab. Will have to practice backing up and restoring many, many more times: with rsync, with tarballs, and with ZFS snapshots.

- TIL about systemd timers, and went a bit deeper into shell scripts, dotfiles and customising the shell. Might move to `zsh` soon, will learn to use `tmux`, and will learn to automate a lot more tasks other than backup and scrub. Also added MFA to SSH login.

- TIFU again, this time much bigger than yesterday. Lost 15 years of media library. It hurts so much, not because of the data but because it seems yesterday’s lesson didn’t sink in fast enough. I was foolish enough to try migrating data from one zpool to another with `zfs send | receive` without clearly understanding it or doing a dry run. Painful lessons learned here
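Since systemd timers came up here as an alternative to cron, below is a minimal sketch that writes a oneshot service plus a matching timer for a backup script. The unit names (`homelab-backup.*`), script path and default schedule are hypothetical; the function only writes files into whatever directory you pass, so it can be tried outside `/etc/systemd/system` first.

```shell
#!/usr/bin/env bash
# Minimal sketch: write a oneshot service + timer pair for a backup script.
# Unit names, the script path and the schedule are hypothetical examples.
write_backup_timer() {
  local unit_dir=$1 script=$2 schedule=${3:-'*-*-* 02:00:00'}
  mkdir -p "$unit_dir"
  cat > "$unit_dir/homelab-backup.service" <<EOF
[Unit]
Description=Homelab backup (oneshot)

[Service]
Type=oneshot
ExecStart=${script}
EOF
  cat > "$unit_dir/homelab-backup.timer" <<EOF
[Unit]
Description=Run homelab backup on a schedule

[Timer]
OnCalendar=${schedule}
# Run a missed job soon after boot if the machine was off at the scheduled time
Persistent=true

[Install]
WantedBy=timers.target
EOF
}

# On a real system (as root), with unit_dir=/etc/systemd/system:
#   systemctl daemon-reload
#   systemctl enable --now homelab-backup.timer
#   systemctl list-timers homelab-backup.timer
```

Compared with a crontab line, the timer gets logging through the journal (`journalctl -u homelab-backup.service`) and catch-up runs via `Persistent=true`.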

## May 2024: automate VM and cloud deployment

- Learned to use Terraform to deploy local VMs with the libvirt provider. Also learned that I can log in to a guest VM remotely with `virt-viewer -c qemu+ssh://<username>@<hostname>/system <vm name>`. Super excited to be able to access my VMs from anywhere!

- Whelp, after rebooting the CentOS Stream 8 VM spun up with cloud-init a month ago, I realised I can’t log in through the console any more. I can only shell in as the default user, but then I can’t change the password because the current password is wrong. Shelling in or logging in as root is fine (but that’s not best practice). That’s when this part of the cloud-init documentation made sense: “Most cloud-init configuration is only applied to the system once. This means that simply rebooting the system will only re-run a subset of cloud-init.” I might be able to ignore this problem for now, but still, gotta read deeper about cloud-init.

- Learned that we can pass a password’s hash instead of plain text, and also why SSH keys are still preferred, thanks to this line in the cloud-init doc: “Specifying a hash of a user’s password with passwd is a security risk if the cloud-config can be intercepted. SSH authentication is preferred”
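A quick way to produce such a hash, assuming OpenSSL 1.1.1 or newer for the SHA-512 (`-6`) option; the function name, password and user name are throwaway examples. The commented cloud-config fragment also sets `lock_passwd: false`, because cloud-init locks user passwords by default, which is one common reason console password login fails even when a hash is provided.

```shell
#!/usr/bin/env bash
# Minimal sketch: SHA-512 crypt hash suitable for cloud-init's "passwd" field.
# Assumes OpenSSL 1.1.1 or newer ("passwd -6"); hash_for_cloud_init is a hypothetical name.
hash_for_cloud_init() {
  # -stdin reads the password from standard input instead of the command line
  printf '%s\n' "$1" | openssl passwd -6 -stdin
}

# Example cloud-config fragment using the hash (user name is hypothetical):
#   users:
#     - name: alice
#       lock_passwd: false      # cloud-init locks passwords by default
#       passwd: <output of hash_for_cloud_init>
#       ssh_authorized_keys:
#         - ssh-ed25519 AAAA... alice@laptop
```

Each call uses a fresh random salt, so hashing the same password twice gives different strings that both verify.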

- Turned out the problem above is not a problem but actually a feature. After all, password auth is frowned upon, so as long as I can shell in with my SSH key, I’d consider it a win.

- Learned a bit more deeply about file and directory permissions. Recalled that a few months ago, while trying to contain a malware infection on Plesk (`alfabypass.php`), I thought I was being smart by removing execute permission from all files AND directories in `.`. Naturally, all of the websites’ styling broke, leaving only barebones HTML. Had to roll back the whole server in shame, because I didn’t know which default permissions to restore for which file/dir. Fun and scary experience.

- The more I learn and read and listen about Linux and its administration, the more I want to be good at this!! I think I found the one job that I’d want to do even without pay.
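Looking back at the Plesk incident above, the styling most likely broke because a directory without the execute bit can’t be traversed, so the web server could no longer reach the CSS files inside it. A common (but not universal, and not necessarily Plesk’s) baseline is 755 for directories and 644 for files; the sketch below applies that baseline with `find`. The function name is hypothetical, and real web roots usually have exceptions (upload dirs, secrets) that this ignores.

```shell
#!/usr/bin/env bash
# Minimal sketch: put a web root back to a conventional permission baseline.
# 755 on directories: the x bit is what lets a process traverse (cd into) a directory.
# 644 on files: world-readable, writable only by the owner.
# Run with sudo on a real server; site-specific exceptions are not handled here.
reset_web_perms() {
  local root=$1
  find "$root" -type d -exec chmod 755 {} +
  find "$root" -type f -exec chmod 644 {} +
}

# Usage (path is hypothetical): sudo bash -c '. ./perms.sh; reset_web_perms /var/www/example-site'
```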

- TIL about Single Event Effects (SEE), which can cause a single bit flip in a computer that can lead to devastating consequences. Now I understand more about the role of ECC RAM and how fragile technology can be

- Although I’ve been comfortably deploying local VMs with `terraform`, it has come to the point where I might need to reconsider this approach compared to Foreman/Katello or Vagrant, as it looks like Terraform is better at provisioning cloud instances thanks to more robust providers. Deploying local VMs, on the other hand, relies solely on a single provider, which can be limited in terms of functionality. In other words, a simple way to provision local VMs can just be a bunch of .xml files and `virsh`, and a more complex way is through Vagrant or Foreman, each of which has its own pros that seem to outweigh Terraform.

- Also, I’ve been actively avoiding using any GUI for admin tasks, as I think it doesn’t help my understanding of how the system works under the hood. One click can mask so many command lines that I need to learn to understand the mechanism.

- Learned more about SELinux and how to read, add and remove fcontext labels etc.

- Learned to use logrotate to preserve `.bash_history`, which is really important during this stage of learning.

- Some nifty handy scripts:
  - `diff -qr . data | grep ' differ'`: get a list of corresponding files whose contents differ
  - `sed 's/\r$//'`: search for a trailing carriage return char and replace it with an empty string. Very useful for cleaning up text files created in Windows
  - `watch -n 1 iostat -xy --human 1 1`: handy when you need to monitor disk activity
  - `iftop -i eno0`: monitor network activity on a specific network interface
  - `ss -ltp`: list listening TCP sockets along with the processes that own them
  - `for i in *.mp4; do ffmpeg -i "$i" "${i%.*}.mp3"; done`: convert all mp4 files in the dir to mp3 with ffmpeg
  - `find . -type f -name '*.jpg' -exec rm {} \;`: find and remove all .jpg files; add `-size` to filter by size
  - `sudo dmidecode --type 17` or `sudo lshw -short -C memory`: display RAM info (type, speed etc.)
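To make a few of these one-liners concrete, here is a small self-contained bash demo run in a scratch directory (all file names are made up): stripping Windows line endings with `sed`, the `${i%.*}` expansion used in the ffmpeg loop (without calling ffmpeg), and the quoted-glob form of `find ... -exec rm`.

```shell
#!/usr/bin/env bash
# Small self-contained demo of three one-liners from the list, run in a scratch dir.
set -euo pipefail
demo=$(mktemp -d)
cd "$demo"

# 1) Strip Windows line endings: the "\r" right before the end of each line is removed.
printf 'line one\r\nline two\r\n' > windows.txt
sed 's/\r$//' windows.txt > unix.txt

# 2) "${i%.*}" removes the shortest trailing ".extension", so the loop can build new names.
for i in "song.one.mp4" "clip.mp4"; do
  echo "${i%.*}.mp3"
done > converted-names.txt     # song.one.mp3, clip.mp3

# 3) Quote the glob so find (not the shell) expands it; "\;" terminates the -exec command.
touch a.jpg b.jpg keep.png
find . -type f -name '*.jpg' -exec rm {} \;
```

Leaving `*.jpg` unquoted only works by accident: if the current directory already contains a matching file, the shell expands the glob before `find` ever sees it.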

- Today I’m one step closer to deploying multiple VMs locally with static IPs using one single `main.tf` file, after a lot of `terraform apply` and `destroy`. I’ve been trying to deploy 2 VMs, one Fedora and one RHEL-based, with static IPs. Initially, I got a permission denied error where libvirt couldn’t read the newly created `libvirt_volume`. From experience, I guessed it could be that qemu-kvm doesn’t have enough permissions, or the permissions of the parent folder where the libvirt pool is located, or some SELinux issue. After some troubleshooting, it turned out to be the simple problem of parent dir permissions. Setting it to 775 instead of 770 helped. It’s a bit weird, because this kind of problem didn’t pop up the last time I ran Terraform.

- Then I could deploy the VMs, but none of them got the static IP I assigned in the `main.tf` file. So I read around and found out that I had to specify `qemu_agent=true` for the VMs to grab the static IPs. It kind of worked, but for some reason only the Fedora VM got a static address, while the RHEL VM only had IPv6. So I thought maybe it had to do with the cloud image itself, and switched from RHEL8 to CentOS 8. Didn’t work either. At this point, I was thinking maybe I could just log in and manually configure the IP address with `nmcli`. After all, it’s about getting the VMs up and running, not HOW you do it. But well, I can’t live with that!

- So I tried providing a different cloud-init network config for each VM in the `main.tf` file, thinking that maybe the QEMU guest agents in the VMs didn’t start properly, and we could assign the IP with cloud-init instead (still not sure how cloud-init works under the hood though, must find out). And this time, the Fedora VM didn’t get a static IP!! Ha! We’re getting somewhere. So the problem is not the RHEL-based image but maybe the order in which terraform + libvirt provision and configure the VMs.

- So finally I tried providing 2 totally separate cloud-init injections, one for each VM. And it worked!!! I was exhilarated!
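The log doesn’t include the `main.tf`, so the Terraform wiring isn’t reproduced here. As a rough sketch of what one cloud-init injection per VM can look like on disk, the bash function below writes a separate cloud-init network config (version 2 format) into its own directory per VM; with the dmacvicar/libvirt Terraform provider, such a file would typically be passed as the `network_config` of a per-VM `libvirt_cloudinit_disk` resource. The function name, VM names, addresses, the interface name `eth0`, and using the gateway as DNS server are all assumptions.

```shell
#!/usr/bin/env bash
# Sketch: write one cloud-init network config (v2) per VM, each in its own directory.
# Function name, VM names, addresses, interface name and DNS server are hypothetical.
set -euo pipefail

write_vm_network_config() {
  local dir=$1 vm=$2 ip_cidr=$3 gateway=$4 iface=${5:-eth0}
  mkdir -p "$dir/$vm"
  cat > "$dir/$vm/network-config" <<EOF
version: 2
ethernets:
  ${iface}:
    dhcp4: false
    addresses: [${ip_cidr}]
    routes:
      - to: 0.0.0.0/0
        via: ${gateway}
    nameservers:
      addresses: [${gateway}]
EOF
}

seed=$(mktemp -d)
write_vm_network_config "$seed" fedora-vm 192.168.122.10/24 192.168.122.1
write_vm_network_config "$seed" alma-vm 192.168.122.11/24 192.168.122.1
```

Keeping one file (and one cloud-init disk) per VM means no two VMs ever read the same network config.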

- While trying to set up IdM (FreeIPA) and Foreman, I realised that I don’t know anything about DNS servers and what happens under the hood when a machine requests a domain name. All my DNS knowledge is limited to changing zone files on my registrars’ platforms. Felt like hitting a brick wall, because this topic is certainly hard and worth diving deep into.

## Jun 2024: CLI syntax, DNS, basic infra setup

- TIL the exact behaviour of rsync’s `--exclude` flag. The problem: when I tried to exclude a specific dir, it still showed up in the backup destination: `rsync -arv --exclude=/home/user/excluded-dir /home/user destination/`. From the man page:

  > if the pattern starts with a / then it is anchored to a particular spot in the hierarchy of files, otherwise it is matched against the end of the pathname. This is similar to a leading ^ in regular expressions. Thus “/foo” would match a name of “foo” at either the “root of the transfer” (for a global rule) or in the merge-file’s directory (for a per-directory rule). An unqualified “foo” would match a name of “foo” anywhere in the tree because the algorithm is applied recursively from the top down; it behaves as if each path component gets a turn at being the end of the filename. Even the unanchored “sub/foo” would match at any point in the hierarchy where a “foo” was found within a directory named “sub”.

- Basically, slashes matter because they determine the root of the transfer, which is what an anchored exclude pattern is matched against.

- If you append a slash to the end of the source directory (`rsync -a /home/user/ destination`), the root of the transfer is the `user` dir, and rsync copies the contents of `user`.

- Otherwise, with no slash at the end (`rsync -a /home/user destination`), the root of the transfer is the `home` dir, and rsync copies the whole `user` directory itself to the destination.

- Then, within that root of the transfer, to match the excluded dir `/home/user/excluded-dir/`, the filter pattern must be `/user/excluded-dir/`. Also, a filter pattern of `excluded-dir/` would match a directory with that name anywhere in `/home/user`, even `/home/user/subdir/subdir/subdir/excluded-dir/`. Syntax matters so much in Linux.

3/6

- Today is the day when, after a lot of building up and tearing down and frustrating troubleshooting of virtual network issues around the kvm/qemu/libvirt/cloud-init/terraform/ansible stack, I can safely say that I have achieved a small milestone, not too different from a kid who has learned to walk safely, or an apprentice who has learned to use the basic tools properly. At this stage, I can:

- Reliably spin up a proper virtual network and machines using Terraform and corresponding OS cloud image

- Inject/modify user info and SSH keys, and run some commands on first boot with cloud-init

- Create a basic Ansible inventory file with groups & run ad-hoc commands on multiple VMs

- Manage VMs with `virsh` for basic tasks such as snapshot, revert, and editing XML files if needed

- Manage file & directory owner, group, permissions and SELinux fcontext following the least-privilege principle

- Create a basic backup and recovery plan following the 3-2-1 principle, using `rsync` and `tar`

- Automate backup and maintenance shell scripts using crontab and systemd timers

- Understand multiple RAID configs

- Create partitions and filesystems on new disks, edit `/etc/fstab` and mount the filesystems

- Set up basic network connections with `nmcli` and `nmtui`

- Use basic tmux, enough to get by without opening multiple shell windows

- Set up SSH connections to VMs/cloud instances over a VPN such as Tailscale

- Use `rsync` and `scp` to transfer files remotely

- Set up a Samba file share server & manage access

- Combine commands such as `find`, `grep`, `awk`, `sed` together, and make use of `history` for more efficient administration

- Troubleshoot most problems with the right Google-fu on the error message (I dread the day I encounter a problem whose answer I can’t find online)

- Be able to dive deeply into a topic to learn enough to be productive with it in a short time

- Also, more importantly, I have had several revelations and realisations that, albeit small, were intuited so quickly that if I don’t write them down, I will no longer remember why they caused me so much confusion in the beginning. Things such as:

- libvirt’s default network `virbr0` shouldn’t be touched or configured manually; let libvirt manage it when creating/modifying the virtual network

- when specifying a static IP address with Terraform, it actually adds a static lease to the virtual network instead of changing the VM’s NIC config itself

- many other tools rely on an agent running on the managed machine to perform tasks, such as the QEMU guest agent, EC2 Instance Connect, Cloudflare Tunnel or Tailscale

- became aware of the endless possibilities and strength of shell scripting, be it bash, python or powershell, combined with regex, for file manipulation and system management

      $ echo "I love sushi, sushi is great!" | sed 's/sushi/hotdog/'
      I love hotdog, sushi is great!
      $ echo "I love sushi, sushi is great!" | sed 's/sushi/hotdog/g'
      I love hotdog, hotdog is great!
      $ sudo netstat -anp | grep ::80
      tcp6 0 0 :::80 :::* LISTEN 4011/apache2
      $ sudo netstat -anp | grep ::80 | sed 's/.*LISTEN *//'
      4011/apache2
      $ hello="Hello, world!"
      $ echo $hello
      Hello, world!
      $ unset hello
      $ echo $hello

      $ du -hs ~
      100G    /home/me
      $ myhomedirsize=$(du -hs ~ | awk '{print $1}')
      $ echo $myhomedirsize
      100G
      $ y="cats dogs bears"
      $ for x in $y ; do echo "I like $x" ; done
      I like cats
      I like dogs
      I like bears
      $ for x in cats dogs bears ; do echo "I like $x" ; done
      I like cats
      I like dogs
      I like bears
      $ cat lines.txt | while read x ; do echo $x ; done
      x equals 0
      x equals 1
      x equals 2

- Set up a VM as a DNS server for the lab virtual network using BIND. Learned much more about zone files than I did in the past. Now the whole lab network uses this DNS server.
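One detail that trips people up with BIND zone files is the SOA serial: secondary servers only pull a zone when its serial goes up, so it should be bumped on every edit. A small bash sketch of the common `YYYYMMDDnn` convention follows; the function name and the zone name in the comment are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: compute the next SOA serial in the common YYYYMMDDnn convention.
# Secondaries only transfer a zone when its serial increases, so bump it on every edit.
# next_serial <current-serial> [YYYYMMDD]   (second argument defaults to today)
next_serial() {
  local current=$1 today=${2:-$(date +%Y%m%d)}
  local candidate=$(( today * 100 ))        # YYYYMMDD00
  if (( current >= candidate )); then
    echo $(( current + 1 ))                 # already edited today (or serial is ahead): bump nn
  else
    echo "$candidate"                       # first edit today
  fi
}

next_serial 2024060301 20240603   # prints 2024060302

# After editing, BIND ships a syntax checker (zone name and path are hypothetical):
#   named-checkzone lab.example.com /var/named/lab.example.com.zone
```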

- Setting up a DNS server also taught me how a system prioritises sources when resolving a hostname: `/etc/hosts` first, then the DNS servers listed in `/etc/resolv.conf` (which NetworkManager manages), with the order set by the `hosts:` line in `/etc/nsswitch.conf`

- Learned about the monolithic libvirt daemon vs modular daemons after encountering some hiccups with `libvirtd` not starting after adding more disks to LVM

- Had to rebuild the zpool to add 2 more disks to improve redundancy, going from raidz1 to raidz2. This is the third time, but I learned so much from past experience. Now each dataset has a proper recordsize, xattr, atime and compression setting. Also, the whole pool has an SSD partition as a separate ZIL device (SLOG) to improve performance.

- Learned about Proxmox HA clusters and Ceph storage. So eager to try them that I got 4 mini PCs to start a cluster and build my own OPNsense box on top. These will run applications that benefit from HA in the context of home usage, mostly network services like DNS, DHCP, firewall or VPN…

Jul 2024: Proxmox cluster, Opnsense, DNS again, podman #

- Set up a Proxmox cluster with 3 x ThinkCentre M910q mini PCs and upgraded them with a bunch of old laptop RAM sticks and NVMe drives. Going to build a 4th one with a 4-port NIC as an OPNsense or NVR box.

- Moved the main server to a new location and rewired all cables in the homelab. Learned to crimp RJ45 connectors and terminate keystone jacks, plus some cable management. Preparing to bring home a 12U rack, patch panel and PDU for proper management.

- Started to get an intuitive feel for the 7 OSI layers while troubleshooting a Cisco managed switch that stopped "switching" after I changed ports during the move above. Should write a post about this.

- Restored and patched the work website after it was hacked

- Something was wrong with Tailscale DNS (100.100.100.100), as domain names didn't get resolved. Disabling MagicDNS, switching to `systemd-resolved` and going back to the previous DNS servers fixed it. Gotta read a bit more into `systemd-resolved` to see how to control it properly.

- Dabbled with Postgres while trying to run Miniflux, Paperless…

- Getting more used to containers (mainly Podman) and understanding them a bit better after running some in production (Deluge, Jellyfin, Paperless and Miniflux), particularly in an SELinux environment

- Learned about setting up dynamic DNS with the Cloudflare API via a nifty shell script. Will carefully explore this to expose some services to the internet just for fun.

- Successfully built and deployed an OPNsense box with a Lenovo M720q, a PCIe riser card, a 4-port NIC and the Proxmox cluster, with minimal downtime, on the very evening that CrowdStrike bricked Windows machines around the world. Also learned about the existence of the ML2 interface, a proprietary Lenovo PCIe interface.
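Going back to the dynamic DNS idea from this month, here is a rough sketch of what such an updater does. It is not the script mentioned above. It assumes a Cloudflare API token with DNS edit permission, with the zone ID, record ID and record name passed in through hypothetical `CF_*` environment variables; the public IP lookup via `api.ipify.org` is just one of several such services.

```sh
#!/bin/sh
# Sketch of a Cloudflare dynamic-DNS updater.
# Assumed (hypothetical) env vars: CF_API_TOKEN, CF_ZONE_ID, CF_RECORD_ID, CF_RECORD_NAME.
set -eu

# JSON body that overwrites an A record; ttl 1 means "automatic" to Cloudflare.
build_payload() {
    printf '{"type":"A","name":"%s","content":"%s","ttl":1,"proxied":false}' "$1" "$2"
}

# Rough IPv4 sanity check so an error page never ends up in DNS.
is_ipv4() {
    printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'
}

update_record() {
    ip=$(curl -fsS https://api.ipify.org)
    is_ipv4 "$ip" || { echo "unexpected IP: $ip" >&2; return 1; }
    curl -fsS -X PUT \
        "https://api.cloudflare.com/client/v4/zones/$CF_ZONE_ID/dns_records/$CF_RECORD_ID" \
        -H "Authorization: Bearer $CF_API_TOKEN" \
        -H "Content-Type: application/json" \
        --data "$(build_payload "$CF_RECORD_NAME" "$ip")"
}

# Only talk to the API when every required variable is set.
if [ -n "${CF_API_TOKEN:-}" ] && [ -n "${CF_ZONE_ID:-}" ] &&
   [ -n "${CF_RECORD_ID:-}" ] && [ -n "${CF_RECORD_NAME:-}" ]; then
    update_record
fi
```

Run from cron or a systemd timer every few minutes, this keeps the record pointing at the current public IP. Because the API is only called when all variables are set, the helper functions can be tried out safely on their own.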

May 2025: Paperless-ngx and the *arr suite #

- Got Paperless-ngx up and running in podman containers

- Got the *arr suite running in podman containers

- Finally understood how secure SELinux is, with its very granular control over what each process is allowed to do. When spinning up a container for a service, it's normal to run `ausearch`, `audit2allow` and `semodule` multiple times

- Started homelab documentation
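The `ausearch`, `audit2allow` and `semodule` loop mentioned above looks roughly like this. It is a sketch that assumes root on an SELinux host with the audit and policycoreutils tools installed; `my_container_local` is a made-up module name. By default the commands are only printed (set `DRY_RUN=0` to execute them), which leaves room to read the generated rules before loading anything.

```sh
#!/bin/sh
# Sketch of the SELinux troubleshooting loop for a new container.
# Assumptions: root, SELinux enforcing, audit + policycoreutils installed.
# MODULE defaults to a hypothetical name; commands are printed unless DRY_RUN=0.
set -eu

MODULE="${MODULE:-my_container_local}"

run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        sh -c "$1"
    else
        printf '%s\n' "$1"
    fi
}

selinux_fix_loop() {
    # 1. Show recent AVC denials
    run "ausearch -m AVC -ts recent"
    # 2. Turn them into a local module (writes $MODULE.te and $MODULE.pp)
    run "ausearch -m AVC -ts recent | audit2allow -M $MODULE"
    # 3. Load the compiled module into the running policy
    run "semodule -i $MODULE.pp"
}

selinux_fix_loop
```

Reading the `.te` file before step 3 is worth the extra minute: `audit2allow` turns every logged denial into an allow rule, so it is easy to grant more than intended.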

Jun 2025: #

- Got this SELinux log:

```
time->Thu Jun 12 12:11:00 2025
type=PROCTITLE msg=audit(1749694260.318:37410): proctitle="deluge-web"
type=SYSCALL msg=audit(1749694260.318:37410): arch=c000003e syscall=2 success=no exit=-13 a0=7f1e72bcbe50 a1=88241 a2=1b6 a3=0 items=0 ppid=3478698 pid=3479230 auid=4294967295 uid=1000 gid=1000 euid=1000 suid=1000 fsuid=1000 egid=1000 sgid=1000 fsgid=1000 tty=(none) ses=4294967295 comm="deluge-web" exe="/usr/bin/python3.12" subj=system_u:system_r:container_t:s0:c19,c504 key=(null)
type=AVC msg=audit(1749694260.318:37410): avc: denied { write } for pid=3479230 comm="deluge-web" name="web.conf.bak" dev="dm-6" ino=269982244 scontext=system_u:system_r:container_t:s0:c19,c504 tcontext=system_u:object_r:container_file_t:s0:c122,c628 tclass=file permissive=0
```

This audit log entry shows that the `deluge-web` process was denied write access to a file named `web.conf.bak`, even though the fcontext looked correct for my Deluge container directory and the file itself carried the `container_file_t` type, which is generally correct for container bind mounts.

It turned out that the process's categories did not include the file's categories under Multi-Category Security (MCS):

- Process: `s0:c19,c504`
- File: `s0:c122,c628`

Because of this mismatch, SELinux denied the write action due to MCS isolation, even though the file type was otherwise allowed.
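A quick way to spot this kind of mismatch is to pull both contexts out of the AVC line and compare their categories. The sketch below assumes simple levels such as `s0:c19,c504`; it does not expand category ranges like `c0.c1023` or handle sensitivity ranges, so treat it as a rough check rather than a policy evaluator. The function names are made up for illustration.

```sh
#!/bin/sh
# Sketch: compare MCS categories of scontext vs tcontext in an AVC line.
# Assumption: levels look like s0:c19,c504 (no c0.c1023-style ranges).
set -eu

# Print the value of a key=value field (e.g. scontext) from an AVC line.
avc_field() {
    printf '%s\n' "$1" | tr ' ' '\n' | sed -n "s/^$2=//p"
}

# Category list = 5th colon-separated field of user:role:type:s0:cX,cY
mcs_categories() {
    printf '%s\n' "$1" | cut -d: -f5
}

# Succeed if every category in $2 (file) also appears in $1 (process).
covers() {
    for c in $(printf '%s\n' "$2" | tr ',' ' '); do
        case ",$1," in
            *",$c,"*) ;;
            *) return 1 ;;
        esac
    done
    return 0
}

check_avc() {
    pcats=$(mcs_categories "$(avc_field "$1" scontext)")
    fcats=$(mcs_categories "$(avc_field "$1" tcontext)")
    if covers "$pcats" "$fcats"; then
        echo "MCS ok: process $pcats covers file $fcats"
    else
        echo "MCS mismatch: process $pcats vs file $fcats"
    fi
}
```

On a live system the input could come from something like `ausearch -m AVC -ts recent | grep 'type=AVC' | tail -n 1`.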

The fix is to use Podman's volume mount options or `--security-opt`.

When running the container with Podman, make sure it has the correct access by adding `:Z` or `:z` to the volume mount in the compose YAML file:

```yaml
volumes:
  - ~/deluge/config:/config:Z
```

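This tells Podman to relabel the directory automatically with the container's unique MCS context. `:z` instead applies a shared label without per-container categories, so several containers can use the same directory.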

- Updated Node.js with `nvm`, the safest and cleanest way to manage Node.js versions per user without interfering with system-wide packages:

```bash
# install nvm if not available
curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.7/install.sh | bash

# reload shell config so the nvm function is available
source ~/.bashrc

# install the new Node.js version
nvm install 20.17.0

# switch to it now and make it the default for new shells
nvm use 20.17.0
nvm alias default 20.17.0
```

- Used `podcast-dl` to download all episodes from a public or private podcast feed:

```bash
npx podcast-dl --threads 4 --out-dir "./another/directory" --url "<feed URL>"
```

- Learned about `fstrim`